Everyone and their dog has at least heard of Neural Networks. However, knowing all the different kinds of models, and why they even exist, requires far more research into the subject.

I first heard about Spiking Neural Networks late in 2017, in a final-year project proposal during my time at King’s College London. That project sounded amazing to me, and even though I barely had an idea of what a neural network was, I was up for the challenge. Over the following seven months, I worked on a paper proposed by my supervisor and successfully managed to implement it. The project had its ups and downs and many challenges, and, I’m proud to say, many innovative solutions on my end. But what’s interesting is that now, about five months after submitting my dissertation, I’m still working on the project, this time as a collaborator of the research team.

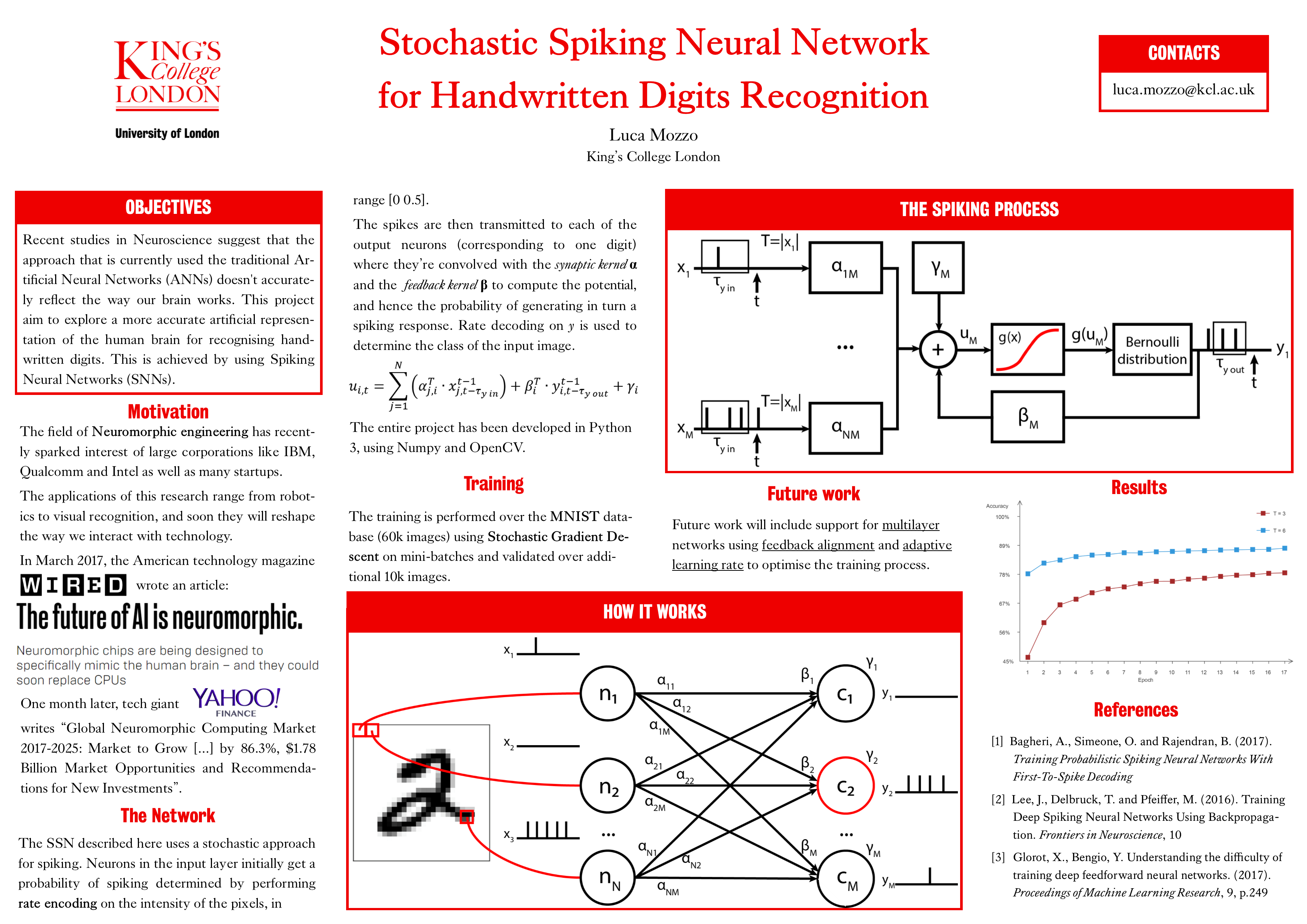

Below is a brief, high-level overview of SNNs.

Introduction

Spiking Neural Networks (SNNs) are relatively new in the field. I say relatively because their discovery dates back to the 60s, but the computational power of machines at the time was not enough to implement a prototype. SNNs belong to the third generation of neural networks because they introduce the concept of time, but there are many more differences. If we think about traditional neural networks (which I will call ANNs from now on), they are designed around what is convenient for humans rather than around biology. Indeed, there’s no such thing as floating-point numbers in the neurotransmitters of our brains. SNNs aim to be more biologically centred, a closer representation of the human brain. In the next section, I will explain how.

ANNs vs SNNs

Instead of explaining the differences in long-winded prose, I’ll use bullet points:

- SNNs have the concept of time – A run of a feedforward ANN is instantaneous: every neuron transmits a single value for each inference task. Conversely, spiking neurons are observed over an arbitrary number of units of time. This means that a neuron may fire more than once during a single inference task.

- Spiking neurons have a completely different structure – In SNNs, neurons no longer fire at every instant. During an inference or training task, a neuron may not fire at all. The concept of firing (or transmitting spikes to the connected neurons) comes from the fact that every neuron has a membrane potential, which increases every time a spike is received as input and decays over time. Once the potential reaches a threshold, the neuron emits a spike to all the connected neurons in the following layer.

- SNNs transmit spikes, ANNs transmit numbers – When a neuron fires, it no longer transmits a floating-point number, but a spike. A spike is an electrical signal that encodes information through the neuron that emits it and the time at which it is emitted.
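
To make the points above concrete, here is a minimal sketch of a single spiking neuron in plain Python. It is a simplified leaky integrate-and-fire model, not the specific model from my project. The function name `simulate_lif` and the values chosen for `weight`, `decay` and `threshold` are assumptions for illustration only, as is resetting the potential to zero after a spike.

```python
def simulate_lif(input_spikes, weight=0.4, decay=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    input_spikes: list of 0/1 values, one per unit of time.
    Returns the list of time steps at which the neuron fired.
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    fired_at = []
    for t, spike in enumerate(input_spikes):
        potential *= decay            # the membrane potential decays with time
        potential += weight * spike   # an incoming spike raises the potential
        if potential >= threshold:    # threshold reached: the neuron emits a spike
            fired_at.append(t)
            potential = 0.0           # reset after firing (a modelling assumption)
    return fired_at


dense_input = [1, 1, 1, 1, 1, 1, 1, 1]    # a spike arrives at every time step
sparse_input = [1, 0, 0, 0, 1, 0, 0, 0]   # spikes arrive only occasionally

print(simulate_lif(dense_input))   # fires more than once: [2, 5]
print(simulate_lif(sparse_input))  # never reaches the threshold: []
```

With frequent input the neuron fires twice within the same run, while with sparse input the potential decays before it can reach the threshold and the neuron stays silent. The output is a list of spike times rather than a single floating-point value.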

Motivation – Why Spiking Neural Networks

If so many papers have been published in recent years, there must be a reason.

The fact that SNNs are closer to natural neural networks is a good motivation in itself. Reverse engineering is the best approach to reproducing our brain (or some of its functionalities), and even if current results do not yet surpass state-of-the-art ANN accuracies, they most likely will in the future.

If that’s not enough, the field of neuromorphic engineering is concerned with the implementation of neural networks in electronic circuits. Most of the industry leaders already have a working prototype, with the great advantage of natively performing learning operations in hardware at a higher speed and with lower energy consumption. These two advantages address two main obstacles of neural computation in recent times: the time and computational power needed to train a network, and the energy required to do it. Because spiking neurons transmit spikes (a 0-1 signal) and do not necessarily emit a spike at every iteration (only when the potential threshold is reached), less energy is required to operate.
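
The energy argument can be illustrated with a rough operation count. The sketch below is only a software analogy, assuming that the number of arithmetic operations is a reasonable proxy for work done; it says nothing about how real neuromorphic chips are built. The helper names `dense_layer_ops` and `event_driven_step` are hypothetical.

```python
def dense_layer_ops(num_inputs, num_outputs):
    """Multiply-accumulate operations for one pass of a fully connected ANN layer."""
    return num_inputs * num_outputs


def event_driven_step(input_spikes, weights):
    """Accumulate input current for one time step of a spiking layer.

    input_spikes: list of 0/1 values, one per input neuron.
    weights[i][j]: weight from input neuron i to output neuron j.
    Returns (currents, additions), where additions counts the work performed.
    """
    num_outputs = len(weights[0])
    currents = [0.0] * num_outputs
    additions = 0
    for i, spike in enumerate(input_spikes):
        if not spike:
            continue                      # silent neurons cost nothing
        for j in range(num_outputs):
            currents[j] += weights[i][j]  # a spike is 0 or 1, so no multiplication
            additions += 1
    return currents, additions


weights = [[0.5, 0.25]] * 4   # 4 input neurons, 2 output neurons
spikes = [0, 1, 0, 0]         # only one input neuron fires at this time step

currents, additions = event_driven_step(spikes, weights)
print(currents, additions)    # [0.5, 0.25] 2
print(dense_layer_ops(4, 2))  # 8
```

In this toy case the spiking layer performs 2 additions where the dense layer performs 8 multiply-accumulates. The saving depends entirely on how sparse the spikes are, and an SNN repeats this step over several units of time, so the comparison is indicative only.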

And now?

This was just a high-level introduction to the topic, which people who already know SNNs may find trivial and unnecessary, but it took me a great deal of time when writing my dissertation. I thought it needed a convincing motivation, which is not so easily found in academic publications.

In the future, I plan to add more articles on the implementation, the specific model I’m using in my work, and the main challenges at the moment.